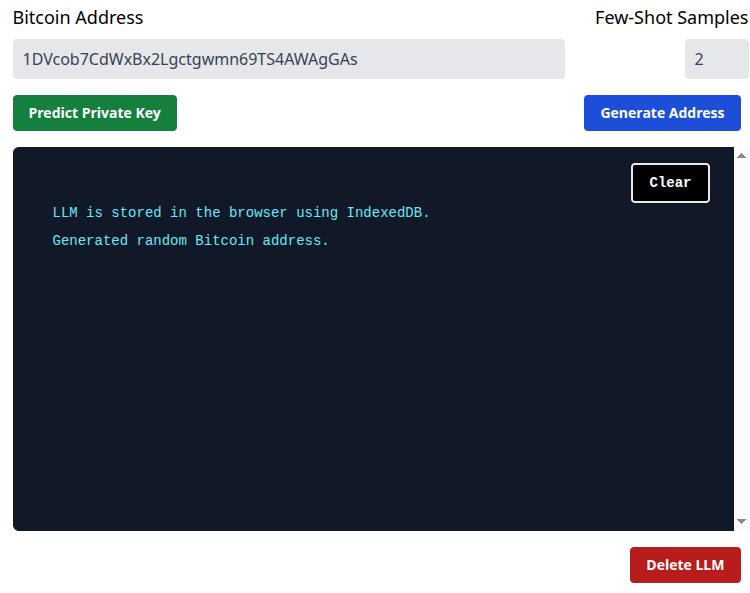

Using On-Device LLMs to Predict Bitcoin Private Keys

Causal Large Language Models predict the next token in a sequence of previous tokens. We give an LLM a bitcoin public address and expect it to predict the secret private key that generated that address.

How it works

The LLM that is used is the Danube3 500 million parameter base model by H20. It has been converted to GGUF format and quantized to 4 bits to reduce it's size to 318mb. It runs entirely in the browser, on the device.

Emscripten was used to build llama.cpp as a static WebAssembly library (with SIMD and multi-thread support). This handles all the math operations in the layers of the model and is configured to use flash attention.

The inference code is written in c++ and compiled to WebAssembly and javascript with the llama.cpp library using Emscripten. Embind is used to bind C++ classes to javascript classes, which reduces developer code. The proxy.h API is used with ASYNCIFY and PROXY_TO_PTHREAD flags to run the code asynchonously in one or more web workers. This stops the main javascript event loop being blocked by the heavy compute occuring when inferencing the model, which allows the UI to remain responsive.

The web app is constucted using Angular and Tailwind CSS.

A random Bitcoin address is generated (or provided by the user). The address can use any of these formats:

- Public Key Hash (begins "1")

- Script Hash (begins "3")

- Witness Public Key Hash (begins "bc1q")

- Taproot (begins "bc1p")

The GGUF model file is downloaded from Hugging Face and kept in the browser IndexedDB store (using Dexie.js).

The model file is passed to the WebAssembly module which loads it into RAM using llama.cpp.

A prompt for the LLM is generated with "few-shot" examples. Each example is on one line and contains the Bitcoin address and the corresponding base58 encoded private key. The final line contains only the target Bitcoin address. The expectation is that the LLM will predict the private key tokens that are absent for the target but present for the examples.

This is similar in concept to Retrieval Augmented Generation (RAG) which expands the context of the prompt by providing external knowledge which was not present in the training data. The extra context in a RAG system is typically retrieved from a database (often using ANN such as HNSW). For this system we generate random keys and addresses instead of finding similar ones (reasons why below).

The prompt is given to the model, which predicts the most likely token that would appear next in the sequence. This is repeated until 44 characters have been generated, which is the length of a 256 bit private key that has been base58 encoded.

The generated candidate private key is then used to derive a Bitcoin address, which is compared to the target address to see if the LLM predicted correctly.

Don't expect to find Private Keys

LLMs are effective because they are trained on data that is inherently predictable. Even though there are a seemingly infinite ways to combine words into sentences, the languages we use have proven successful because we have restricted which words are used together and we've built grammatical patterns so that it is easier for us to remember how to use the words effectively. LLMs are effective at identifying these underlying patterns.

Bitcoin addresses however, have been specifically designed to avoid predictable patterns. They are created using cryptographic algorithms that apply an avalanche effect where one small change in the private key causes a dramatic change in the address. They are inherently unpredictable by design.

An LLM will not predict private keys because there are no patterns in the tokens of the Bitcoin addresses or keys.

Even if an LLM was trained with hundreds of trillions of tokens of Bitcoin addresses and private key data, the neural network would not converge because it would be unable to find temporal patterns between the tokens.

Why make it then?

To demonstrate that LLMs can predict text that looks plausible, even with data that is not predictable, but it should not be mistaken as being correct or valuable.

To demonstrate that (not so large) LLMs can run on device, in the browser.

There are other domains where temporal patterns do exist within the data where this technique may be applied effectively. Possibly enhanced with RAG or fine-tuning.

Possible Improvements

Increase the number of few-shot samples in the prompt.

Trim the LLM vocabulary to only include tokens that are a single character. The token We is treated differently to the tokens W and e.

Using a wider and deeper LLM such as LLama 3 8B/70B or Falcon 180B may result in better predictions. Scaling up models has shown unexpected emergent abilities.

Fine-tune the LLM using a dataset of bitcoin addresses and private keys (although the loss will probably not reduce and can be prone to catastrophic forgetting)

LLM browser demo

LLM browser demo